What are ITLs

Ever wondered how Oracle locks rows on behalf of transactions? In some RDBMS vendor implementations, The manager maintains information on which row is locked by which transaction. This works great in theory but when pretty soon the lock manager becomes a bottleneck as each transaction must wait to get a lock from the manager and then again wait to release the lock. This of course goes against the scalability of the applications. In fact, application developers of some RDBMS products despise holding locks for a long time, and often times resorting to a full table lock when all its needed is to get a few rows locked. This creates further waits and scalability suffers.So how is that different in Oracle? For starters there is no lock manager. When a row is locked by a transaction, that information is placed in the block header where the row is located. When another transaction wishes to acquire the lock on the same row, it has to travel to the block containing the row anyway, and upon reaching there, it can easily identify that the row is locked, from the block header. There is no need to queue up for some single resource like a lock manager. This makes applications immensely scalable.

So what portion of the block header contains information on locking? It is a simple data structure called Interested Transaction List (ITL), a linked list data structure that maintains information on transaction address and rowid. ITL contains several slots for transactions. When a row in the block is locked for the first time, the transaction places a lock in one of the slots with the rowid of the row that is has locked. In other words, the Transactions is Interested in the row, hence the name Interested Transaction List. When the same transaction or another one locks another row, the information is stored in another slot and so on and so forth.

So how many slots are available? During the table creation, the parameter INITRANS parameter defines how many slots are initially created in the ITL. When the transactions exhaust all the available slots and a new transaction comes in to lock a row, the ITL grows to create another slot. But to what extent can it grow? It can grow up to the number defined by the MAXTRANS parameter of the table, provided there is space in the block.

What is an ITL Wait

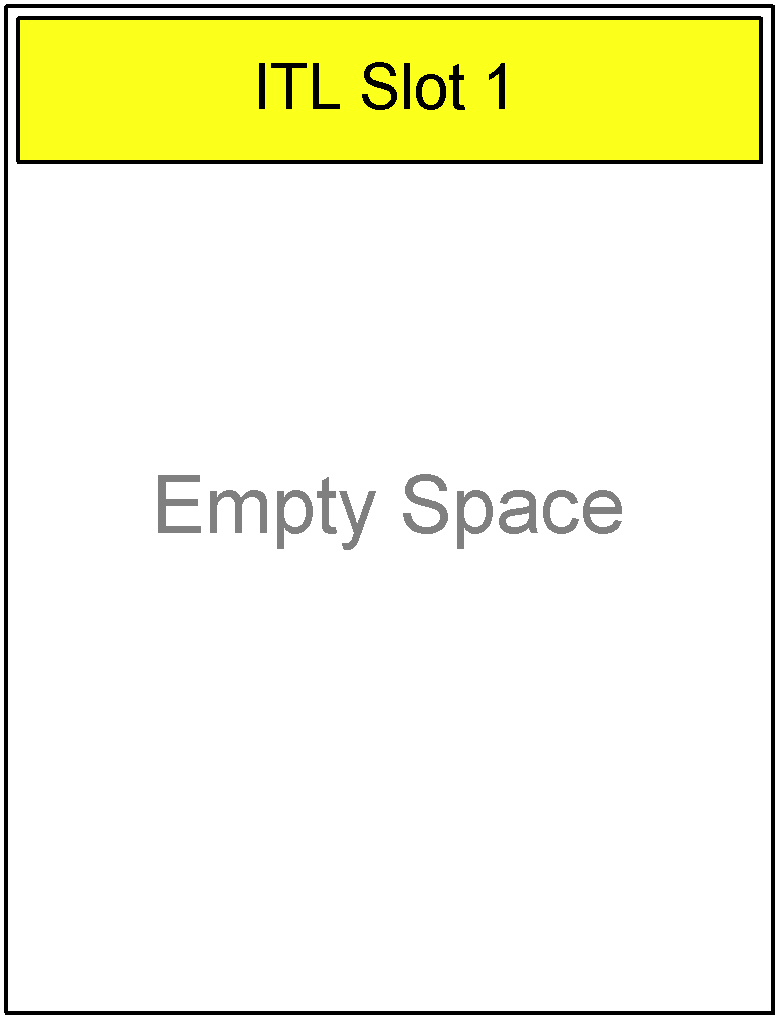

If a block is so packed that the the ITL cannot grow to create a free slot, then the transaction that needs to lock a row waits, till a slot becomes available. This wait is termed as ITL waits and can be seen when from v$session_wait is the session is waiting. Let's see these description in action. Assume our table has INITRANS of 1 and MAXTRANS 11. A typical data block right after the creation of the table will look like this.

Since the INITRANS is 1, there is only one slot for the ITL. The rest of the block is empty. Now we inserted two rows into the table. These will go into this block and the block will look like the following.

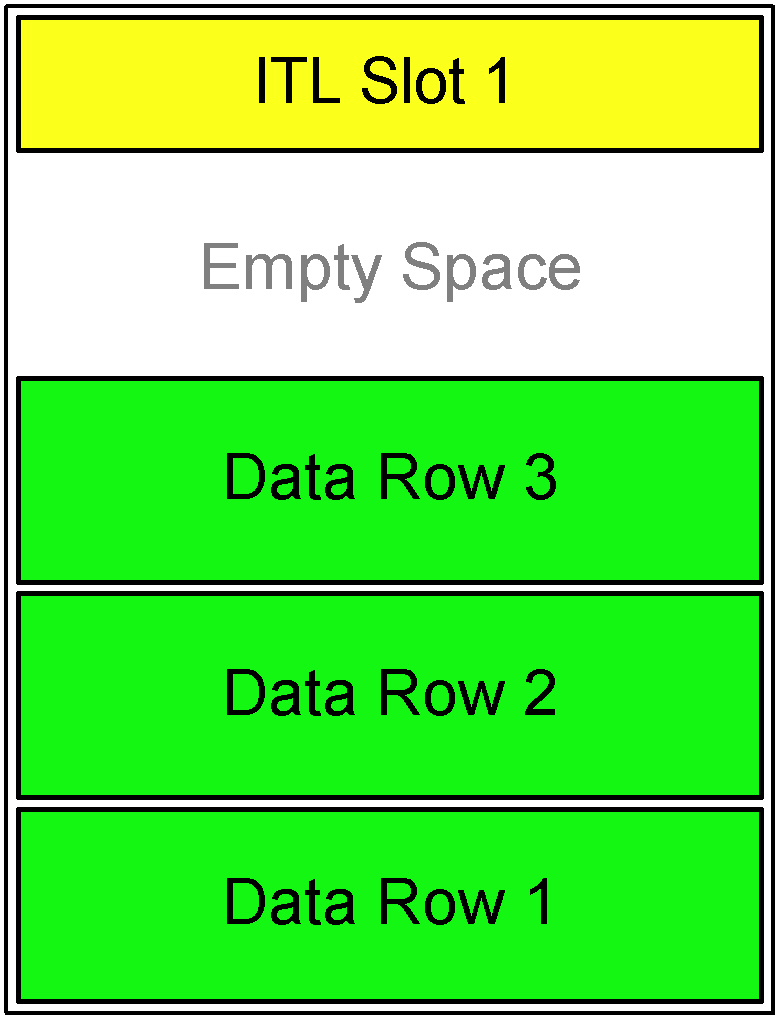

Note how the empty space is reduced. A this point a transaction, Txn1 updates the row 1, and does not commit. This locks the Data Row 1; and the transaction places the lock in the slot in the ITL as follows.

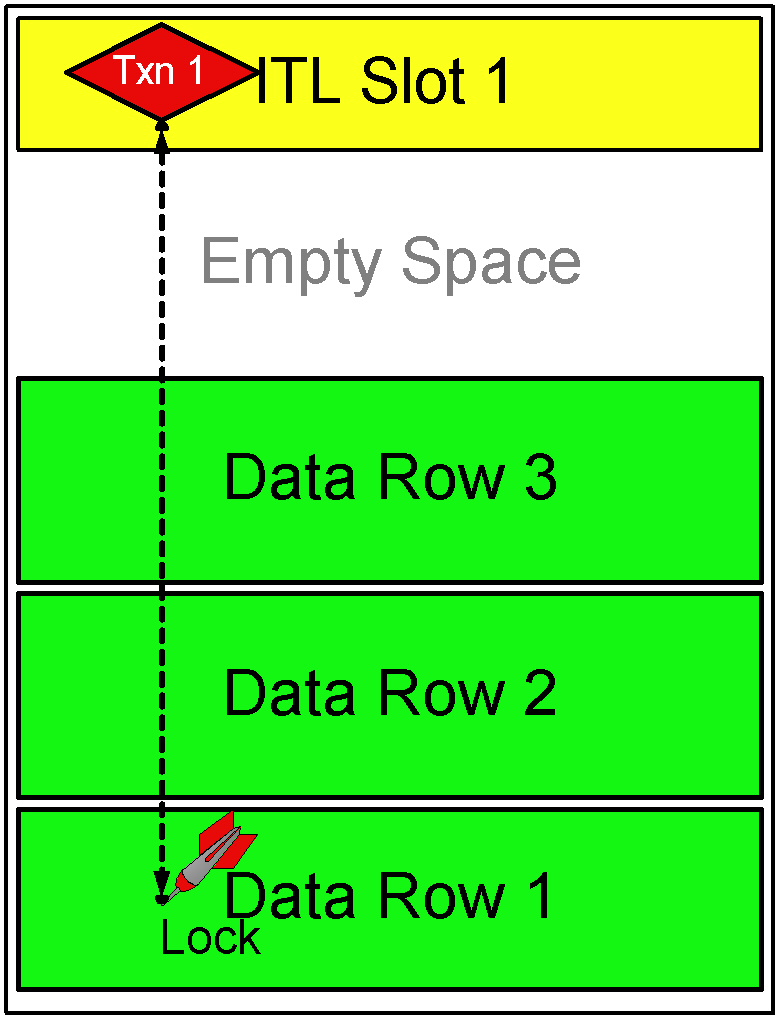

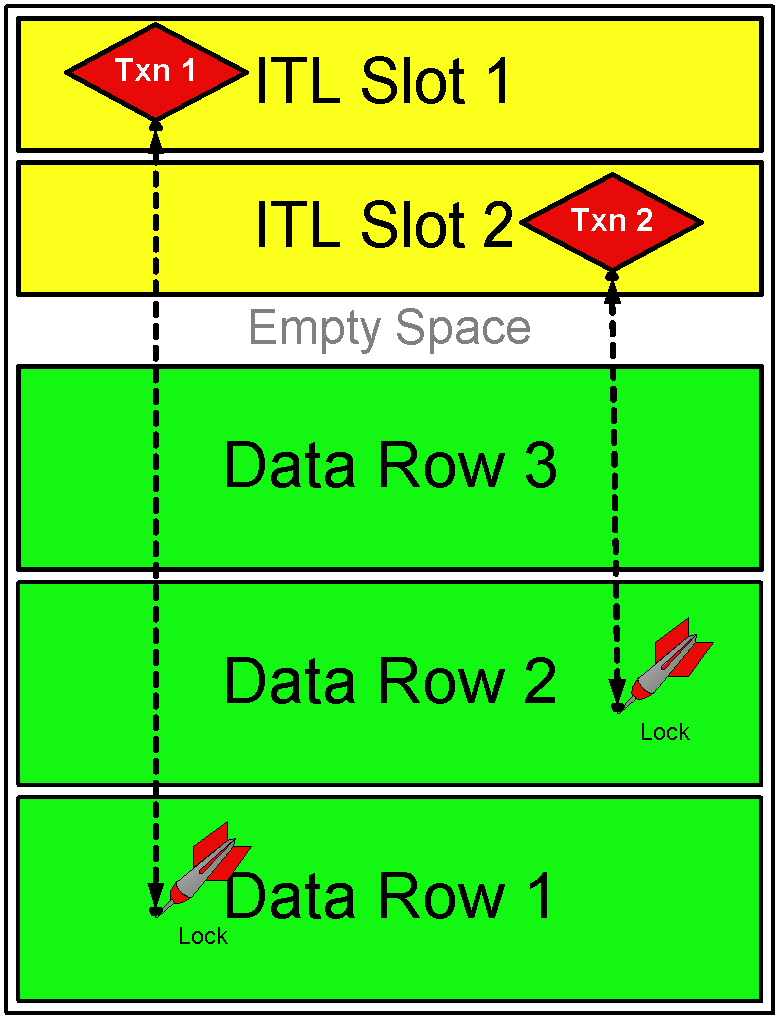

Then another transaction Txn2 updates the row 2 and wants to lock the row. However there are no more slots in the ITL available to service the transaction. The MAXTRANS entry is 11 meaning the ITL can grow up to 11 slots; and the block has empty space. So ITL can grow by another slot and Slot # 2 is created and allocated to Txn2 as in the following diagram.

Now the emplty space in the block is severely limited, and it will not be able to fit one ITL slot. If at this time another transaction comes in to update the row 3, it has to have a free slot in the ITL. The MAXTRANS is 11 and currently only two slots have been created, so another one is possible; but since there is no room in the block to grow, the slot can't be created. Therefore the Txn3 has to wait till either of the other transactions rolls back or commits and the slot held by it becomes free. At this time the session will experience ITL waits wait event as seen from the view V$SESSION_WAIT.

How to Reduce ITL Waits

The primary cause of ITL waits is the unavailability of free slots in the ITL. These can be due to- low setting of the MAXTRANS, which places a hard limit on the number of transactions that can have locks on a block

- or, the block is so packed that there is no room for the ITL to grow OR

- or both

The other option is to making sure the data is less packed so that ITL can grow enough to accommodate the surges in ITL. This can be done by increasing PCTFREE, increasing FREELISTS and FREELIST GROUPS parameters for a table. This will make a block hold less data and more room for the ITL to grow. As a direct result of the reduction in packing, the table will experience less buffer busy waits event, increasing performance.

What INITRANS is Optimal?

Now that you have set up the INITRANS for a segment, how do you make sure that the setting is correct. The best answer will be found in Oracle 9i Release 2 provided Segment Level Statistics. However, that is only available in 9iR2. In other versions it's slightly tricky. You have to rely on V$LOCK where lock_type = 'TX' and share_type = 4 to find out the possible ITL wait scenarios; but it is never accurately predicted.On the other side of the coin, how do you know if the INITRANS setting is too high and just being wasted? This is possible by using a few random block dumps from the segment in question. First, find out the header file# and header block# of the segment by issuing the query:

SELECT HEADER_FILE, HEADER_BLOCK

FROM DBA_SEGMENTS

WHERE OWNER = '...'

AND SEGMENT_NAME = '...';

Use the output of the query to do a block dump of the header block.

ALTER SYSTEM DUMP DATAFILE <file#> BLOCK MIN <block#> BLOCK MAX <block#>;

This will produce a trace file in the USER_DUMP_DESTINATION directory. Open the trace file and find out the section on extent control as follows.

Extent Control Header

-----------------------------------------------------------------

Extent Header:: spare1: 0 spare2: 0 #extents: 1 #blocks: 10

last map 0x00000000 #maps: 0 offset: 2080

Highwater:: 0x02011f87 ext#: 0 blk#: 0 ext size: 10

#blocks in seg. hdr's freelists: 0

#blocks below: 0

mapblk 0x00000000 offset: 0

Unlocked

Map Header:: next 0x00000000 #extents: 1 obj#: 53689 flag: 0x40000000

Extent Map

-----------------------------------------------------------------

0x02011f87 length: 10

Find out the real number of blocks for the segment from dba_segments as follows

SELECT BLOCKS FROM DBA_SEGMENTS WHERE OWNER = '...' AND SEGMENT_NAME = '...';

Say, this returns 12 and the #blocks above shows 10; it means the first 2 blocks are header block the data starts at 3rd block onwards. Take a dump of the third block. The third block is obtained by adding 2 to the header block# obtained above.

ALTER SYSTEM DUMP DATAFILE <file#> BLOCK MIN <block#> BLOCK MAX <block#>;

This will produce another trace file in USER_DUMP_DEST directory. Open the file and locate the follwoing section.

buffer tsn: 8 rdba: 0x02011f88 (8/73608)

scn: 0x0000.389b8d81 seq: 0x01 flg: 0x04 tail: 0x8d810601

frmt: 0x02 chkval: 0x2ef5 type: 0x06=trans data

Block header dump: 0x02011f88

Object id on Block? Y

seg/obj: 0xd1ad csc: 0x00.389b8d81 itc: 4 flg: - typ: 1 - DATA

fsl: 0 fnx: 0x0 ver: 0x01

Itl Xid Uba Flag Lck Scn/Fsc

0x01 0x0003.003.000024cc 0x00804067.050a.13 C-U- 0 scn 0x0000.389b304e

0x02 0x0007.010.00002763 0x00801f49.0453.01 C--- 0 scn 0x0000.389b2628

0x03 0x0002.00a.000025d5 0x00804d42.04b2.25 C--- 0 scn 0x0000.389b2811

0x04 0x0006.006.00002515 0x00800962.03c8.18 C-U- 0 scn 0x0000.389b3044

This shows several information on the block dump, especially the ITL section just above. This table has an INITRANS entry of 4, so there are 4 lines, one each per the ITL. In the Flag column above the flag -U- mans the ITL was used. In this case only two of the ITLs were used. The other two were never used. However, this is for this block only. By selecting block dumps from other randomly selected blocks you could have an idea how many ITLs are actually used. Then you may decide to reduce the INITRANS.

Automatic Block Management in Oracle9i

In Oracle9i, this is somewhat changed due to the introduction of the Automatic Block Management (ABM) feature. This is specified at the tablespace level in the storage parameter as SEGMENT SPACE MANAGEMENT AUTO. For instance, the tablespace TS1 can be created as

CREATE TABESPACE TS1

DATAFILE '...'

EXTENT MANAGEMENT LOCAL

SEGMENT SPACE MANAGEMENT AUTO;

The last line does the magic. In the ABM mode, Oracle maintains a bitmap for each segment with the information on the block. A bitmap is a data structure with a bit representing each bock. When a block becomes available for insert, the information is made available simply by setting the corresponding bit in the bitmap rather than freelists. So what des this have to do with ITL Waits. Unfortunately, the very cause of ITL waits is not due to the freespace management, rather due to the unavailability of it. So you still have to look for ITL Waits and correct them using INITRANS and MAXTRANS. In fact the problem becomes exacerbated due to the fact the block becomes quite packed due to an efficient space management system and that leads to lack of space for ITL. You can prevent this by keeping a large INITRANS for the segment.